Winning the AI Products Arms Race

Roughly every decade, technology makes a giant leap that erases the old rules and wipes out our assumptions. The Internet. Mobile. Video. Blockchain. Like clockwork, companies and creators begin a mad race to make money off the next big thing, burning through ungodly sums of cash in the process.

Unless you’ve been living off the grid for the past year, it’s clear that the next big thing is artificial intelligence (AI). Early in 2023, ChatGPT is the tool du jour. Spend a few minutes on Twitter and you’ll see people gushing over its ability to write screenplays, debug code, or tell you how to make dairy-free mac and cheese.

Giving anybody with WiFi the ability to churn out unlimited amounts of (mostly) accurate information on demand is a sci-fi level feat. But how can companies leverage the technology behind GPT — and AI in general — to solve substantive problems and expand their product market fit?

That’s the million (or trillion) dollar question.

If you thought leveraging AI was as simple as deploying a chatbot into your app, you may be in for a rude awakening. How, then, do you create an AI product people will actually use?

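In this article, we’ll seek to answer that question by digging into why so many AI products fail, where the lucrative opportunities lie, why it pays to invest early, how to overcome AI’s limitations, and a five-step process for de-risking AI products.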

As we go through each section, a few things will become clear that we think are important takeaways to mention right away:

AI alone can’t disrupt your business.

Early adoption is crucial.

Finding product market fit for AI products requires assessment.

We’ll elaborate as we go.

About the Author

Aniket Deosthali

As the Head of Product, Conversational Commerce, at Walmart, Aniket led the product market fit journey of Walmart’s AI-powered shopping assistant. He’s also an active tech advisor and investor.

Why So Many AI Products Fail

Every time you refresh your newsfeed, it seems like there’s a new AI tech trend or demo with the potential to change the trajectory of humankind (and maybe put you out of business). But when the rubber meets the road, these AI products rarely create end user value — even when they’re built by Silicon Valley royalty.

AI Use Case 1: Amazon Alexa

Consider Amazon Alexa, the personal assistant powered by conversational AI. In 2016, Jeff Bezos mentioned that “the combination of new and better algorithms, vastly superior compute power, and the ability to harness huge amounts of training data… are coming together to solve previously unsolvable problems.”

Fast forward six years, and Alexa lost about $10 billion in 2022 alone, making it Amazon’s biggest money loser.

AI Use Case 2: Google Duplex

Then there was Google Duplex: the “near-human” AI chat agent built to handle everyday tasks like checking into flights and booking reservations. Three years later, Google shuttered it.

AI Use Case 3: Autonomous Driving

And what about those self-driving cars we had such high hopes for? If Ford shutting down their autonomous driving technology is any indication, you’re still going to have to buckle into the driver’s seat for the foreseeable future.

The unfortunate reality is that 85% of enterprise big data and AI projects fail.

What’s going on here? If brands with thousands of employees and billions of dollars can’t hit a home run with an AI product, how will you?

The issue isn’t the raw technology. Some of the stuff you can do with AI is nothing short of magical, and it’s only getting better. The issue is figuring out how to *apply* AI in the right situations at the right time on the right channels — and turning a profit in the process.

In other words: finding product market fit for AI products.

It’s tempting to press the panic button when experts warn that AI will disrupt virtually every industry, from healthcare to law to even the arts. But there’s a key nuance that’s missing amidst the noise.

AI Alone Can’t Disrupt: The Machine Learning Phases

AI software like ChatGPT, BERT, and LaMDA can’t cut into your market share, much less replace you, on its own. However, a competitor that leverages these AI tools to scale its operations can definitely disrupt you.

To understand why, let’s break down the two main phases of machine learning: training and inference.

Machine Learning Training is the process of feeding an algorithm curated data so it can “learn” and produce a model. For example, human linguists teach Grammarly’s AI by feeding it high-quality data on what proper comma usage looks like.

Machine Learning Inference is the process of using that trained model to answer questions in real time. For example, Grammarly makes suggestions to make users’ writing crisp and grammatically correct.

The better the data you feed the machine, the better the output will be. Accordingly, companies that have goldmines of data (and users constantly generating more of it) have an advantage over startups building AI capabilities from scratch. For example, Talkspace is well positioned to use its data to create a mental health bot, and Noom to create a health coach.
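To make the two phases concrete, here is a minimal sketch in Python. It is a toy word-count classifier, not how Grammarly or any production system works, and the example messages and labels are hypothetical.

```python
from collections import Counter, defaultdict


def train(examples):
    """Training: learn word counts per label from curated (text, label) pairs."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts


def infer(model, text):
    """Inference: score new text against the counts learned during training."""
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in model.items()}
    return max(scores, key=scores.get)


# Hypothetical curated training data (illustration only).
curated = [
    ("where is my package", "shipping"),
    ("my package arrived late", "shipping"),
    ("i want my money back", "refund"),
    ("please refund my order", "refund"),
]

model = train(curated)                    # done once, ahead of time
print(infer(model, "package is late"))    # done per request, in real time
```

Even in a toy like this, the quality of `curated` determines what `infer` can do, which is the point of the paragraph above: the data, not the algorithm, is the scarce ingredient.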

As Chamath Palihapitiya pointed out on the All-In Podcast, single-use AI models will become commoditized:

“The real value is finding non-obvious sources of data that feed it… that’s the real arms race.”

AI case in point: Meta’s ad targeting is superior to their competitors’ because they have pixels everywhere, giving them access to the best data, resulting in a quarter of the Internet’s ad revenues. In other words, it’s not about who has the blueprint — it’s about who owns the land.

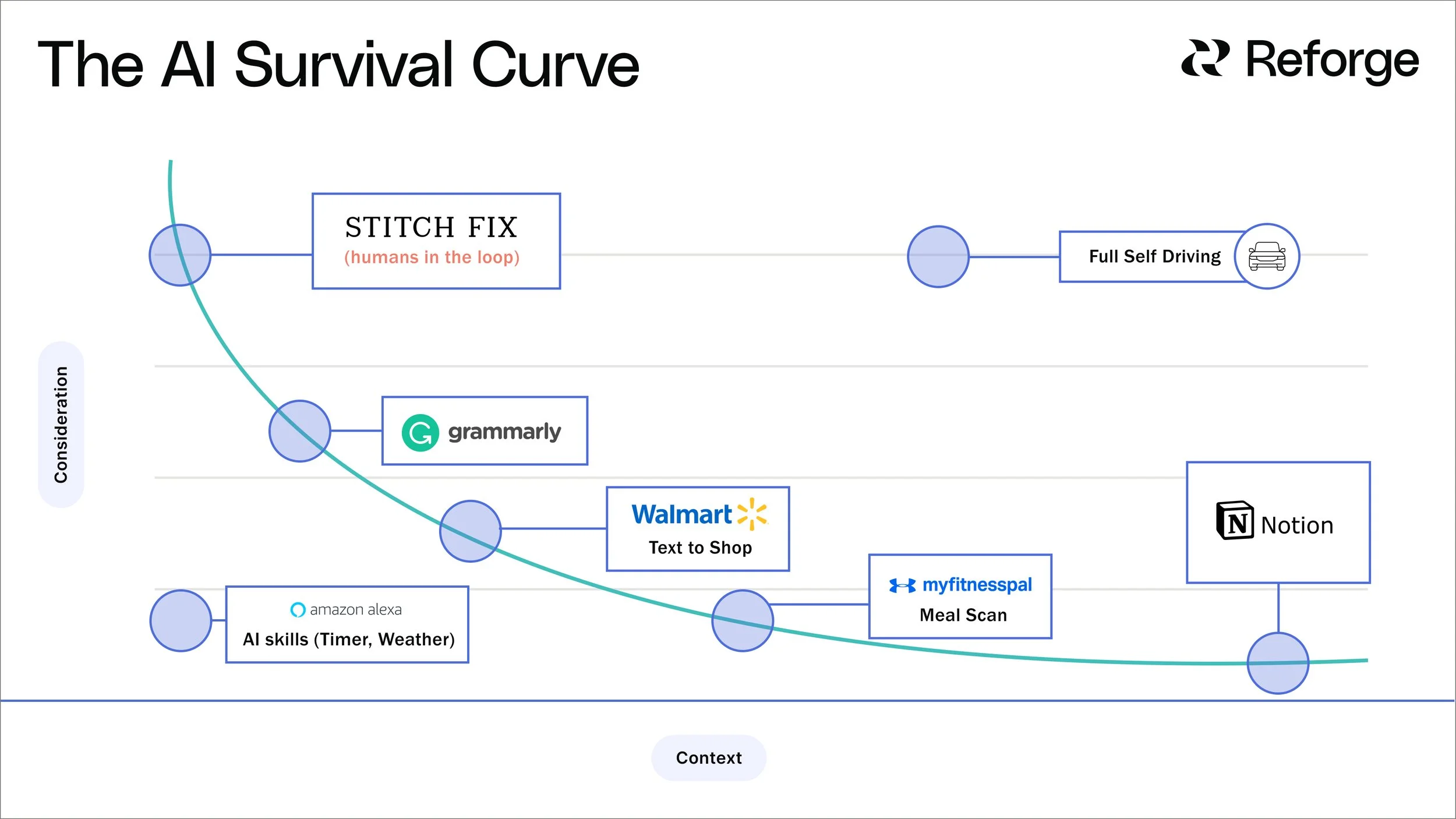

The AI Survival Curve: Where the Lucrative Opportunities Lie

The most efficient way to evaluate AI opportunities and unlock the advantages of AI is by using the Consideration x Context framework. Let’s start with some baseline definitions.

Y-Axis = Consideration: The amount of effort required to make a decision.

The more thought you put into a decision, the higher consideration it is. For example, choosing a dish detergent is “low consideration” for most shoppers, compared to buying a car, which is “high consideration.” Consideration can be represented as a function of the number of compelling alternatives and the stakes (users’ tolerance for errors, for example).

X-Axis = Context: The volume of abstract concepts AI needs to know.

Context refers to how many abstract concepts a model needs to know in order to provide a useful response. Does it only need to understand a small batch of data points (like a product catalog), or does it need to understand the entire internet (like ChatGPT)?

AI Examples in Business

As you can see, successful AI products fall neatly on a curve, which we’ll call the “Survival Curve.” Let’s take a closer look at where various AI products fall along this curve with a few examples.

Stitch Fix: Their model uses AI to augment humans to help you find the perfect outfits. This requires a lot of thought (high consideration), but the options are limited to Stitch Fix’s fashion vertical, rather than an entire shopping mall (low context).

Grammarly: This AI-powered writing assistant has moderate stakes because it can be a huge help for people who write for a living. The context is also moderate considering it has to be trained on proper grammar, but not every piece of content ever published.

Walmart Text to Shop: This tool helps users automate household shopping. The stakes aren’t quite as high here, but it does require more context with Walmart’s vast e-commerce and in-store assortment of 1.5+ million SKUs.

MyFitnessPal Meal Scan: This feature tells people how many calories they’re consuming by simply scanning what’s on their plate. A picture is worth a thousand words, but here a picture is worth millions of data points. Accordingly, it’s a high-context feature, but low stakes since the consequences are minimal for being wrong.

Notion AI: This GPT-3-powered tool requires more training than anything else: the whole internet! However, the stakes are low; you can always brainstorm and write on your own, and there aren’t any compelling alternatives.

Fully self-driving vehicles: This is way above the curve in the top right quadrant because it requires an ungodly amount of training, combined with high stakes to be “right” 100% of the time.

Amazon Alexa: This falls below the curve because most of the use cases are low stakes (checking the weather, turning down the volume) and low context (it can order Domino’s Pizza, but it can’t order you a pie from the best deep dish spot in Seattle).

The AI Survival Curve tells us whether an AI-based solution stands to solve the needs of your users today. As the stakes and optionality increase, models need more human attention to consistently find the best answer.
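One way to reason about the curve is to score an idea on both axes and compare the combined load against a threshold. The sketch below is only an illustration: the article does not give a formula, so the hyperbolic shape (consideration times context roughly constant), the 1-10 scales, and the `budget` and `tolerance` values are all assumptions.

```python
def survival_zone(consideration, context, budget=25.0, tolerance=0.4):
    """Classify an idea relative to a hypothetical survival curve.

    Assumes the curve is consideration * context == budget, with both
    inputs scored on a 1-10 scale. The curve shape, budget, and tolerance
    are illustrative assumptions, not values from the article.
    """
    if not (1 <= consideration <= 10 and 1 <= context <= 10):
        raise ValueError("scores must be between 1 and 10")
    load = consideration * context
    if load > budget * (1 + tolerance):
        return "above the curve"  # demands more than today's AI can deliver
    if load < budget * (1 - tolerance):
        return "below the curve"  # feasible, but little value at stake
    return "on the curve"


# Hypothetical scores, chosen only to mirror the qualitative examples above.
print(survival_zone(9, 3))    # high consideration, low context
print(survival_zone(10, 10))  # high consideration, high context
print(survival_zone(2, 2))    # low consideration, low context
```

As technology matures, the assumed `budget` would grow, which is one way to picture the curve shifting outward over time.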

AI products that achieve product market fit do so by focusing on high ROI use cases that ensure the cost of context doesn’t increase beyond the point of what’s shippable to their users. This sets them up to start the virtuous cycle of data collection and expand their product benefit as technology evolves.

The earlier you start this process, the better. Otherwise, you might get left in the (digital) dust.

Let’s dig deeper into why it’s important to invest in AI early.

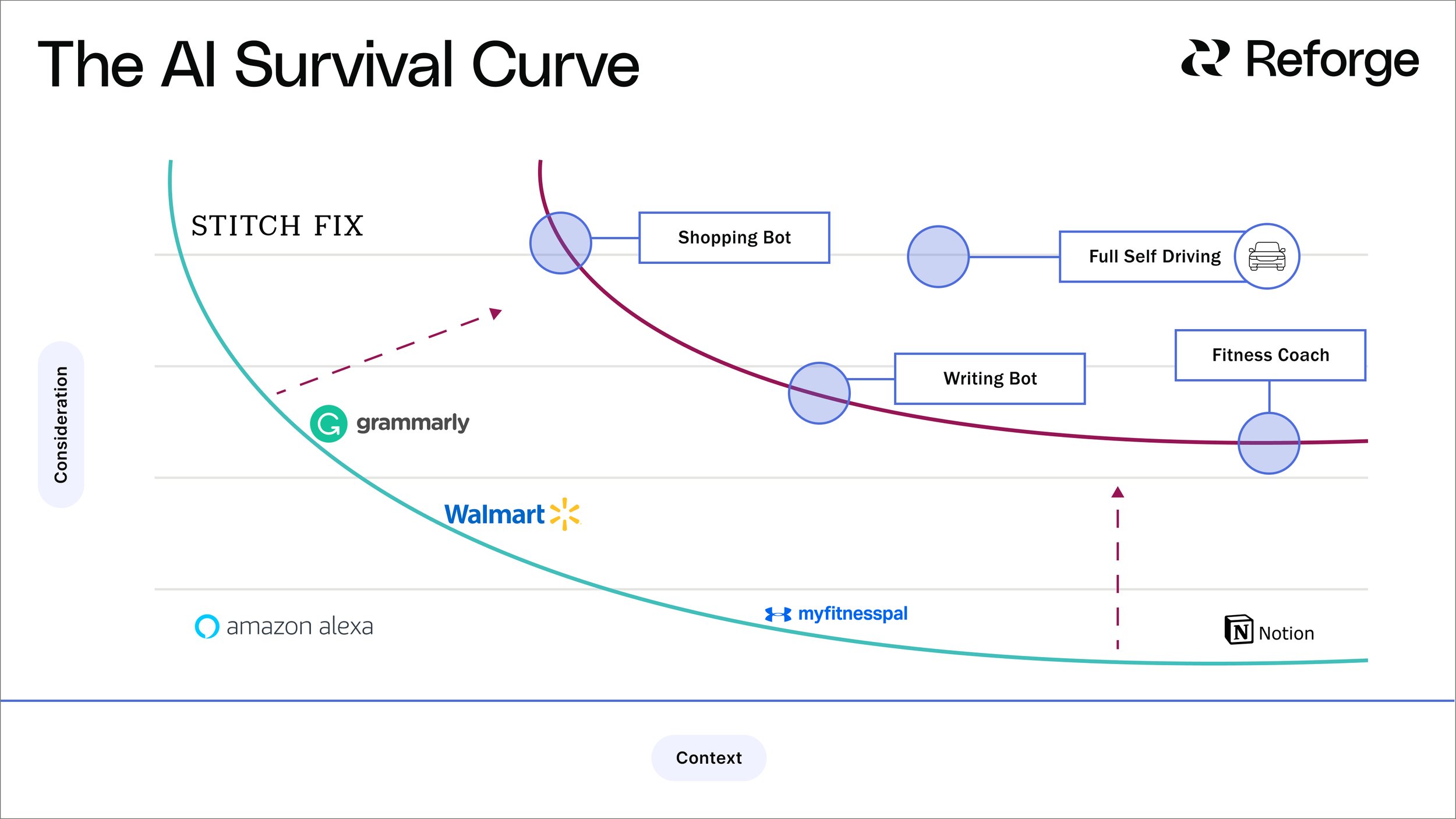

Secure Your Future: Invest in AI Early

As AI progresses, the Survival Curve will shift outward. The applications we think of as “mind-blowing” today may be considered obsolete by 2030. GPT-3 is already dropping jaws, and each new generation of models is trained on more data than the last. Imagine what GPT-30 will be like.

This shift will take time, perhaps decades. But the worst thing a company can do right now is tune out the noise and pretend AI is just a flash in the pan. Just like companies that embraced digital had the easiest transition to mobile, companies that embrace AI early will be prepared for more advanced use cases down the road.

It’s up to leaders to identify opportunities along today’s efficient frontier so they aren’t left behind as technology matures. But, of course, it’s not all smooth sailing.

Overcoming the Disadvantages of AI

To further secure your future in AI, it’s important to understand its limitations so that you can overcome them advantageously.

Today’s Biggest Disadvantage of AI: Truth

Right now, AI’s Achilles’ heel is Truth, meaning its biggest limitation is that it doesn’t know what is True. We’re using a capital “T” because there’s a big difference between correctly naming the capital of Botswana and correctly predicting the demand for laundry detergent in 2030.

In the TruthfulQA benchmark study, published in 2022, researchers from the University of Oxford and OpenAI assessed how accurately AI language models answered 817 questions spanning health, law, finance, and politics. The best model was truthful on just 58% of questions, compared to 94% for humans.

That doesn’t mean AI is “bad.” On the contrary, it’s doing exactly what it’s supposed to: mimic whatever data engineers feed it. In this case, the model was trained on data that includes popular misconceptions with the potential to deceive humans.

AI’s Truth gap has prompted many leaders to pump the brakes on blindly adopting it. For example, the coding Q&A site Stack Overflow banned users from sharing answers generated by ChatGPT.

“The primary problem is that while the answers which ChatGPT produces have a high rate of being incorrect, they typically look like they might be good and the answers are very easy to produce.”

Prioritize Truth and Training to Take Advantage of AI

If being right matters — and it does if you want your business to survive — one solution is to take a page from Google’s book, and focus on a specific problem domain. With Pathways, Google achieved accuracy approaching the levels of humans (and quickly) by narrowing the use case (to medicine) and curating the quality of the training data. While Google’s model was less ambitious than ChatGPT, it’s better suited to create enterprise value.

Bottom line: Enterprise companies should focus less on absolute accuracy and more on closing the accuracy gap between AI and humans. That’s where training comes in — training yields truth.

Using AI to write an essay or get a pineapple smoothie recipe is nifty. However, the next big leap is earning users’ trust with complex, high-stakes problems — and the only way to earn that level of trust is through training.

There’s a reason most of us prefer talking to a real person instead of a robot, whether we’re canceling a subscription or picking out a new pair of jeans. Humans have a combination of two types of intelligence:

Fluid intelligence: the ability to think abstractly to solve new problems

Crystallized intelligence: the understanding of what ‘good looks like’ that comes from prior learning and experiences

Most AI language models have amazing fluid intelligence, but struggle with crystallized intelligence. For example, ChatGPT can write a screenplay about talking sharks in the style of Quentin Tarantino, but it can’t fake the experience of being in writers’ rooms for 20+ years.

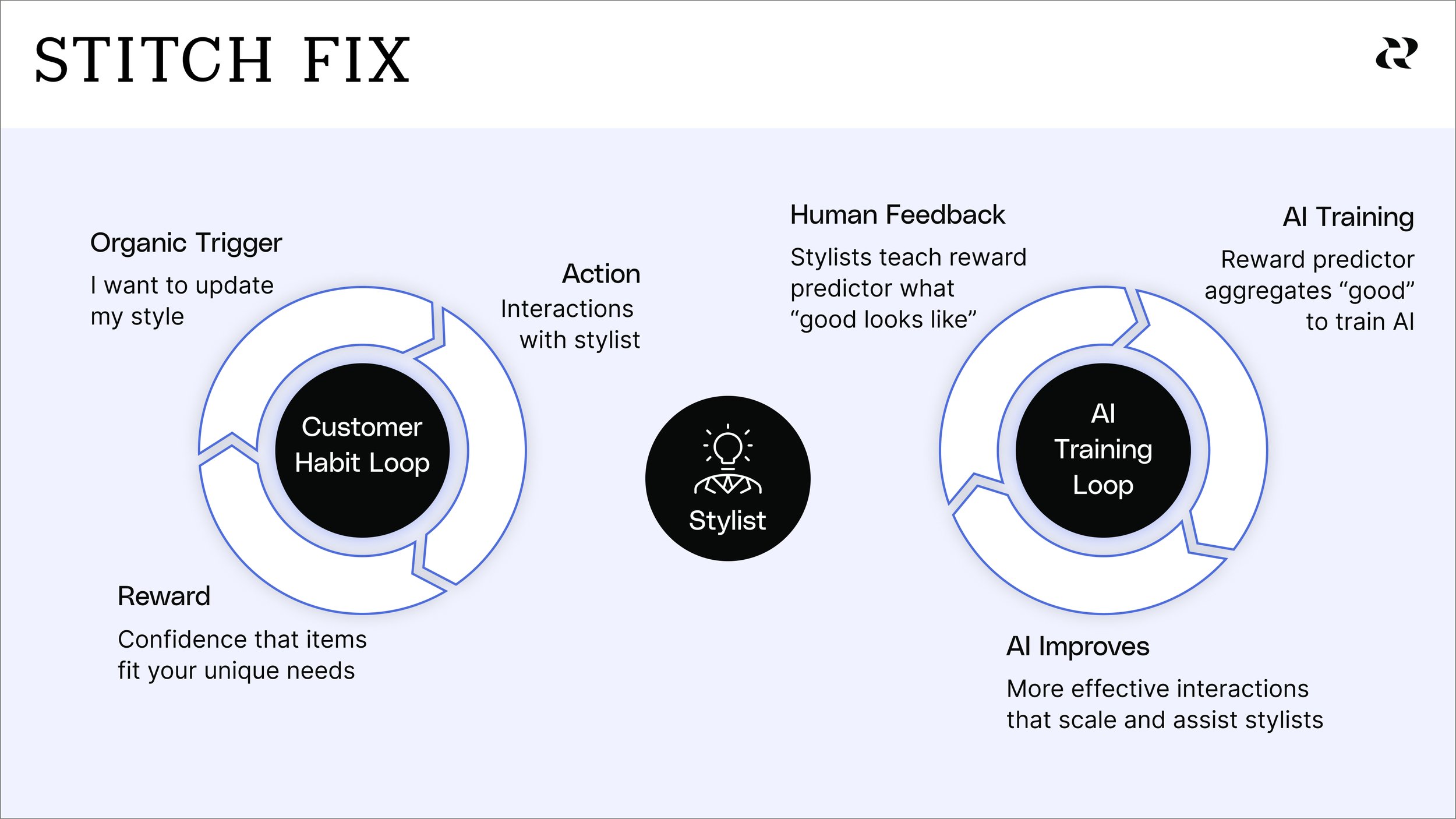

Reaching the point where we can trust AI to solve substantive problems requires a hybrid model where humans work in tandem with AI, continually teaching it what “good” looks like. That could mean feeding the system 10,000 hours of customer service calls or aggregating 20 years’ worth of patient data to predict health issues.

In this sense, you can think of AI as your intern rather than your boss; the higher your consideration is, the more guidance your “intern” will need.

Factoring Trust Into the Survival Curve

Let’s tie trust back into the consideration axis of our Survival Curve.

High Consideration AI Use Cases

For mid-to-high-consideration use cases, it’s all about leveraging human talent to train AI until customers trust the tool to help them make high-stakes decisions. Imagine a Best Buy “super sales bot” that could consolidate the skills and knowledge of the best sales reps to help customers choose their TV, computer, or sound system.

This is represented below as a self-reinforcing loop in which trust begets engagement. In a styling service like Stitch Fix, the stylist plays a critical role as the “human in the loop,” enabling the core customer habit loop while also training the AI reward predictor. This model aggregates stylists’ crystallized fashionista experience to train a “super stylist” via a process called Reinforcement Learning from Human Feedback (RLHF), the same technique behind ChatGPT.

Low Consideration AI Use Cases

When it comes to low-consideration use cases, however, the human in the loop is the customer. The goal is to automate key steps in the user journey while nailing distribution to wherever the user’s attention is. The more you can automate, the better. However, total automation isn’t necessary; clever UX goes a long way.

For example, my team launched Walmart’s text shopping assistant with a feature called proactive reorder. Users receive a text with a list of items their household needs for the week, tweak it with a few taps, and check out within seconds. This collapses the otherwise tedious process of grocery shopping via an e-commerce funnel into a seamless experience, and every interaction makes the algorithm smarter.
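As a rough illustration of how a proactive reorder list could be drafted, the sketch below estimates each item’s purchase cadence from past order dates and flags items expected to run out within the week. This is not Walmart’s actual algorithm; the function name, the averaging heuristic, and the sample purchase history are all hypothetical.

```python
from datetime import date, timedelta


def items_due(history, today, horizon_days=7):
    """Return items whose next expected purchase falls within the horizon.

    history maps an item name to its past purchase dates, sorted ascending.
    The cadence estimate (mean gap between purchases) is a deliberately
    simple, assumed heuristic.
    """
    due = []
    for item, dates in history.items():
        if len(dates) < 2:
            continue  # not enough history to estimate a cadence
        gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
        avg_gap = sum(gaps) / len(gaps)
        next_due = dates[-1] + timedelta(days=round(avg_gap))
        if next_due <= today + timedelta(days=horizon_days):
            due.append(item)
    return sorted(due)


# Hypothetical household purchase history (illustration only).
history = {
    "milk": [date(2023, 1, 1), date(2023, 1, 8), date(2023, 1, 15)],
    "detergent": [date(2022, 12, 1), date(2022, 12, 31)],
    "batteries": [date(2023, 1, 10)],
}
today = date(2023, 1, 20)
print(items_due(history, today))
```

In a real product, the customer’s edits to the suggested list (items removed or added before checkout) would be the feedback signal that improves the next week’s suggestion.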

Walmart’s personalized Text Shopping Assistant makes Thanksgiving a breeze

Now that we have a clearer idea of where the AI opportunities are, let’s get to building.

Expanding your products’ value with AI can be daunting. The recent gold rush has prompted countless teams to slap together AI products that don’t solve problems, don’t make money, or both.

Let’s break down a five-step process for de-risking AI products and putting the odds of product market fit in your favor.

Step 1: Define and Prioritize Your Use Cases

A product is a bundle of features that satisfies a use case, which refers to the core reason(s) people choose your product. A use case is defined by the answers to five questions:

What problem are you solving?

Who uses your product?

Why do they use it?

What’s the alternative?

How often do they use it?

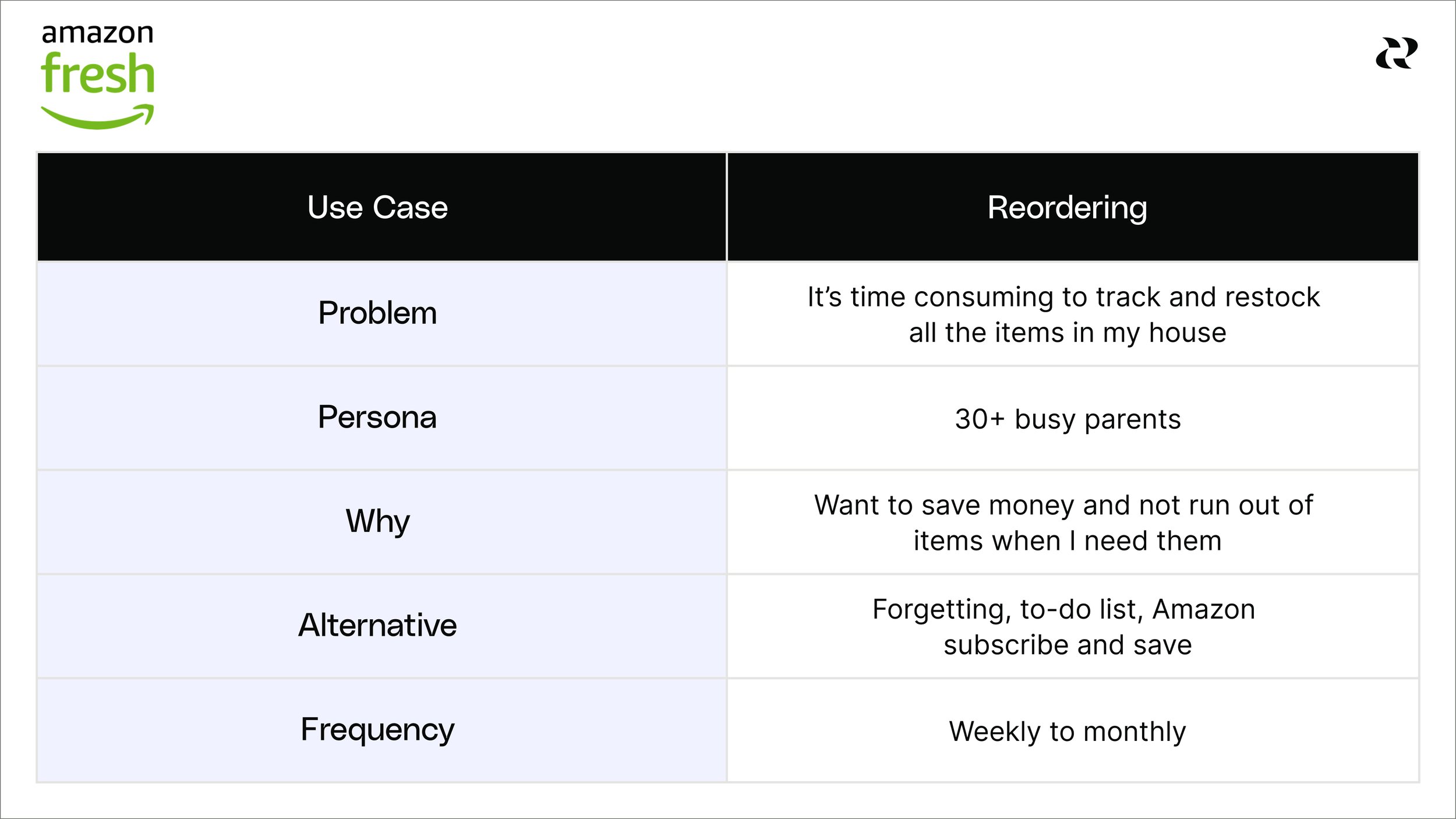

Let’s take a product team at Amazon Fresh as an example: the team identifies through user research that customers spend an inordinate amount of time restocking the same items in their households, summarized by the ‘Restocking’ use case below.

Most products have multiple use cases. But when it comes to scaling with AI, prioritize the use cases that are most frequent and most important for users. This is a proven way to address the cold start problem, in which a model has not yet been “warmed up” with enough quality training data to offer good results back to users.
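The five questions and the frequency-and-importance ranking can be captured in a small data structure. The sketch below is one possible encoding: the field names, the 1-5 importance scale, and the multiplicative score are assumptions, and the two sample use cases are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    problem: str           # What problem are you solving?
    who: str               # Who uses your product?
    why: str               # Why do they use it?
    alternative: str       # What's the alternative?
    uses_per_month: float  # How often do they use it?
    importance: int        # 1 (nice to have) to 5 (critical); assumed scale


def prioritize(use_cases):
    """Rank use cases by frequency times importance, highest first."""
    return sorted(
        use_cases,
        key=lambda u: u.uses_per_month * u.importance,
        reverse=True,
    )


# Hypothetical use cases for a grocery product (illustration only).
cases = [
    UseCase("Planning a holiday meal", "Hosts", "Reduce stress",
            "Recipe sites", uses_per_month=0.2, importance=5),
    UseCase("Restocking household staples", "Busy households", "Save time",
            "Weekly store trip", uses_per_month=4, importance=4),
]

for case in prioritize(cases):
    print(case.problem)
```

Frequent use cases rise to the top here because they generate training data fastest, which is what helps a model get past its cold start.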

Step 2: Formulate a 10x Product Hypothesis

Once you’ve prioritized your use case(s), consider how you might leverage AI to drive a step change improvement. Here are some categories of AI-enabled value propositions:

Proactivity: Can you anticipate customer needs?

Personalization: Can you serve relevant information?

Personality: Can you inject life into brand interactions?

Automation: Can you collapse time and reduce effort?

Accessibility: Can you enable seamless, multi-modal interactions?

The goal is to identify solutions that will be significantly — not incrementally — better than the status quo in order to overcome the switching costs needed to facilitate habit formation.

The Amazon team posits a couple of hypotheses for the restocking use case:

“We believe that subscription retention will increase by 100% if

…users can have the convenience and cost-savings of subscribing without feeling like they will lose control and end up with too many items.

…users are proactively prompted to subscribe to their frequently purchased items with a one year projection of cost savings.”

Step 3: Check Your Riskiest Assumptions

Before you build anything, it’s essential to gather user feedback on your product hypothesis. This should be a blend of customer insights (qualitative data) and actual customer behavior (quantitative data). Together, these give you a sense of whether your idea can solve a real problem for real people.

AI Use Case: Amazon Fresh

Continuing with our Amazon Fresh example, the team assumes that a predictive subscription feature will increase revenue and make customers’ lives easier. But the team needs to validate those assumptions first. Their research reveals that most people hesitate to put their laundry detergent order on autopilot for fear that they might end up with too much.

AI products have special considerations across the three types of risk:

Customer risk: Are you solving a real problem?

Does the feature present a significantly better solution or is it a cool thing to do technically?

Is there potential for bias to impact certain users’ experiences?

Business risk: Will this be profitable?

Does the feature build on and enhance your organization’s proprietary datasets?

Are you prepared for the up-front and on-going costs of machine learning operations (annotation and retraining)?

Tech risk: Is this feasible in terms of software and hardware?

Do you have a broad enough set of training data for the model to make accurate inferences?

Are you able to offer predictive performance based on the needs of your use case?

Step 4: Validate Your Bets with Rapid Prototyping

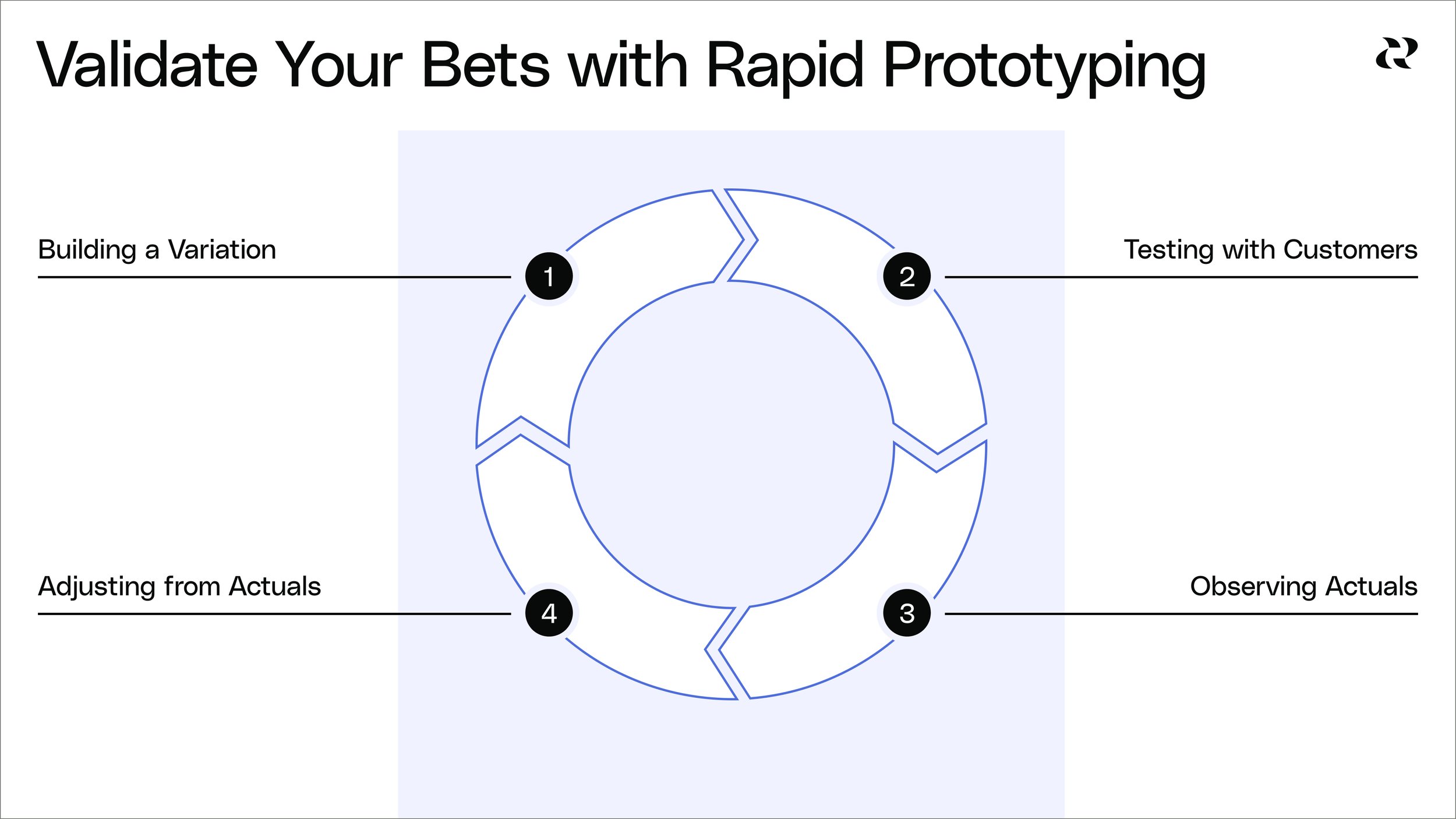

It’s time to actualize your idea with a prototype, or minimum viable product. You’re not preparing for the front cover of The New York Times here. You just need to find the fastest path to get “actuals,” or learnings from human behavior in the real world. That means getting scrappy and creating the shortest possible learning loop, which is usually accomplished by doing things that don’t scale.

Rinse and repeat until you start converging on what Tom Chi calls “eyes light up moments,” those moments when users truly feel the delight associated with the core value of your product. Think of how you felt the first time that you got DoorDash delivered during a stormy day.

In the case of Amazon Fresh, we could prototype a subscription bot with a few agents who would work with a small group of pilot customers who represent the early adopter persona we identified in step one.

From here, the team comes away with two key learnings:

37% of users in the pilot group had a delighter moment when the bot double checked with them before shipping out an item.

21% of users opted in when the bot asked whether they wanted to subscribe to an item they buy frequently.
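Pilot groups are small, so percentages like these carry wide error bars. The sketch below computes a Wilson score interval for a proportion as one way to sanity-check them. The pilot size of 100 users is an assumption, since the example does not state how many customers took part.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Hypothetical pilot: 21 of 100 users opted in (pilot size is assumed).
low, high = wilson_interval(21, 100)
print(f"opt-in rate plausibly between {low:.0%} and {high:.0%}")
```

If the interval is too wide to support a decision, that is a signal to extend the pilot before investing in AI infrastructure.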

The team is well on its way to developing product user fit, a sharp understanding of having the right product for the right user, all without setting up complex AI infrastructure.

Step 5: Launch Your Minimum Lovable Product

Minimum Lovable Product (MLP) is a term Amazon popularized for a product with just enough features for early adopters to adore it, not just tolerate it. This stands in contrast to a minimum *viable* product, which requires the least amount of effort to gather learnings.

A couple of decades ago, building an MLP required a ton of resources and time. Today, however, product development teams can launch MLPs in a fraction of the time with off-the-shelf tools like Quiq, spaCy, and Labelbox.

AI Use Case: Notion AI

Note-taking company Notion integrated GPT-3 into its Notion AI feature to help users streamline otherwise tedious tasks like creating meeting agendas and writing job descriptions, all within the Notion platform that users know and love.

AI Use Case: Amazon Fresh

To wrap up our Amazon example: the prototyping stage generated key learnings on what a fully automated MLP needs to look like for a specific use case. Based on this, Amazon can build an MLP leveraging a SaaS offering (such as Amazon Lex) to launch scaled testing of their experience. This kickstarts the processes of training and data collection so the team can compound product improvements over time.

Building an incredible AI product or experience is non-negotiable for product market fit.

Final Thoughts: Integrate Your AI Product

The difference between AI and other paradigm-shifting innovations is that there’s an incredibly low barrier to entry. In the early days of the internet, building a website was an arduous task that only large tech teams could handle. Contrast this with AI, where virtually anyone with an internet connection can build applications with open-source models.

This is great from an accessibility standpoint. But as Allen Cheng points out, product teams benefit from “moats” that create competitive advantages, and resources like OpenAI turn moats into shallow puddles. Accordingly, the real value lies in integrating AI into existing systems to augment them — not deploying a bot and calling it a day.

We covered a lot in this article, so let’s zoom out and summarize the key takeaways we first introduced at the top — now with all the context.

AI alone can’t disrupt your business, but your competitors who leverage AI definitely can.

AI capabilities will keep advancing and the Survival Curve will shift outward, so early adoption is crucial.

Finding product market fit for AI products requires assessing where your product falls on the Survival Curve.
